Image Source: ChatGPT interface

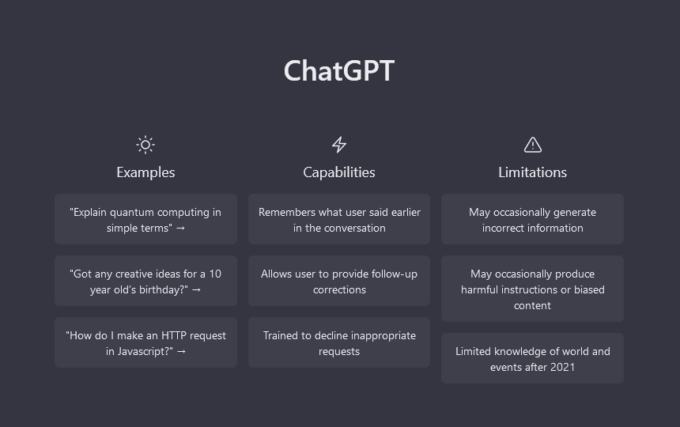

By early 2023, the advent of OpenAI's website and its chatbot ChatGPT had triggered a global education crisis. Teachers around the world feared it was a homework- and exam-writing machine that would let school and university students turn to the Internet for their assignments. The global fear was that a machine would do the work for them.

With this new tool, students can, it seems, safely leave the writing of entire essays, even books, to artificial intelligence. Not surprisingly, the idea caught on. ChatGPT subscribers reached 10 million in just two months. Even more impressive, the online platform crossed one million users within five days of its launch. Beyond all that, it also set a record as the fastest-growing platform ever, gaining a whopping 100 million users by January 2023.

Perhaps because of its phenomenal growth rate, critics started to dread that something central to human personality development might be lost, as writing (and not just school homework) would now be done by an algorithmic system.

The first ChatGPT tutorial video arrived on the smartphone of Tobias, a German high school student, at the turn of the year. By early 2023, Tobias was receiving one TikTok clip after another, all telling him how to use ChatGPT. Alongside girlfriend and makeup advice, polished dance routines, and silly animal videos, they presented ChatGPT as a rather ingenious invention.

Whether at his German school or online, someone was constantly telling Tobias how easy it was to do his homework with ChatGPT, and how it would turn his entire school life upside down in a matter of minutes. Soon, Tobias found himself trying out the new toy.

Since ChatGPT was launched at the end of November 2022, discussions on German online platforms have often revolved around this AI-based language system. Soon, Germany's quality press, including Der Spiegel, Zeit, and TAZ, had all taken up the topic.

What can AI already do? Where is it already being used? When will it take over our work? Has it passed the Turing Test? These are all important questions, not only for German readers and users. Meanwhile, we are plastered with new “expert” opinions every week. Perhaps AI could write such columns itself. Well, it has not written this one – so far!

Many experts claim that ChatGPT, and even more so the GPT-4 version at $20 a month, can answer complex questions, give explanations and tips, and help with travel planning just as quickly as it writes computer code or a new theatre play.

It is simple: enter your question and copy the answer. This is very easy to do, particularly for those who are lazy or short on time.

OpenAI's ChatGPT (GPT = Generative Pre-trained Transformer) also helps Tobias with basic learning materials, particularly when there was not enough time during lessons at his local German school. As a result, AI has become a standard tool for the 17-year-old.

If the answer isn't good enough, Tobias simply types “please explain this in more detail” or asks the same question in a different way. Within moments, he gets new and more detailed texts that help him learn.

Is this cheating?

Reactions to ChatGPT are at times filled with enthusiasm, while at other times there are serious concerns. Yet the signs that AI could overwhelm our world have been visible for decades. In 1997, IBM's Deep Blue computer defeated world chess champion Garry Kasparov, a significant step for computing and perhaps also for human society.

For quite some time, GPS-guided algorithms have helped us with driving. On the downside, algorithms steer (some say manipulate) people through so-called “personalized” recommendations: which books and music to buy, even which movies to watch. They also bombard us with an endless array of bargains.

But things really start to go off the rails when an artificial intelligence draws crazy pictures on demand and chats with us. Much of this is fascinating and scary at the same time.

ChatGPT can indeed be scary, especially for schools (read: teachers) and universities (read: professors). Where essay writing is supposed to shape a personality, promote critical thinking, and educate young people to become responsible citizens, students are starting to leave the writing to a machine. Sociologists might put it this way: structure (the algorithm) replaces agency (us).

If the educational step of conceptualizing and writing a school or university essay is taken over by an algorithm-guided computer, something very central and personal can be lost. And that is before we even get to the sophisticated and intellectually demanding “essay as form”, as the German philosopher Adorno called it.

Meanwhile, Tobias had been caught by his teacher. But what does getting caught actually mean? Why shouldn't Tobias use ChatGPT? In the end, it is not that different from using Google. After all, Tobias was looking for a topic for his history assignment. He was in a hurry, and he found one using AI.

The homework concerned the Bundesrat, Germany's Federal Council, as a constitutional institution. Unsurprisingly, a German-language version of ChatGPT, ChatopenAI.de, provided quite good answers. But then Tobias' teacher found out about it.

It was a coincidence, said his teacher, Herr Fritz. Tobias had been playing with ChatGPT and received quite a lot of typical but also very suitable responses. Yet most of the sentences in Tobias' homework had a very similar tone. Worse, on balance, his answer did not really fit the specific questions Herr Fritz had set.

Yet the question remains: did Tobias do something forbidden? Did he deceive his teacher? Did he plagiarize? Herr Fritz was not too sure about all that either. In the end, he asked Tobias to give a talk about ChatGPT in class.

Most of the students at Tobias' school had already heard about the program. By now, many were also using ChatGPT for their school assignments. Most in his class thought ChatGPT was extremely useful. From now on, it will become increasingly difficult for German teachers to evaluate written achievements – or are they ChatGPT's achievements?

Computers now generate continuous texts that we increasingly cannot tell apart from those written by people, and this is becoming a huge problem for teaching. ChatGPT is even said to have already passed a law exam at the University of Minnesota.

Even more interesting is the fact that ChatGPT has “easily” achieved the prescribed minimum score in a theoretical examination that medical doctors must pass in order to be allowed to practice in the USA.

Meanwhile, the European Commission and the European Parliament have been working on an Artificial Intelligence Act to set some basic rules for AI. So-called high-risk applications, such as facial recognition and credit-worthiness checks, are to be restricted.

Almost by definition, ChatGPT can carry out high-risk activities. AI-generated pictures and texts can be attributed to a specific person, and someone would obviously have to be held responsible for such content. ChatGPT can very easily spit out sexist, racist, or manipulative content. And it can simply issue lies, falsehoods, misinformation, and even disinformation.

Berlin-based company launches its own version

The arrival of ChatGPT in Germany is part of its global wave. In just five days, it reached one million users worldwide. No other online service has risen so fast: Instagram took just under three months and Twitter two years. The hype surprised many experts, because similar large language models, such as Google's LaMDA, Facebook's OPT-175B, and now LLaMA, have been around for a while, even if they are not yet freely usable.

At its most basic level, a GPT (Generative Pre-trained Transformer) is a so-called “neural” network whose connections run between computing nodes. By adjusting the strength of these connections during training, the network learns, a bit like our brain does, minus our subjectivity and creativity, of course.

Artificial intelligence trains itself on massive amounts of text and data, such as online articles, online photos, previously saved pages on the net, essays, blog entries, scientific papers, fiction, cooking recipes, and so on. In short, there are literally millions of texts from which ChatGPT “learns”. In other words, it is a bit like McDonald's standardized food rather than a meal at an exquisite Joël Robuchon restaurant.

Rather than as a creative Nobel Prize winner in literature, one might think of ChatGPT as a child locked in a library who, over time, pieces together some sort of “meaning” from the texts available.

Worse, ChatGPT works exclusively via algorithms. Which word follows which is determined by algorithms. Which word sequences fit together grammatically and in terms of content is also determined by algorithms.

ChatGPT does not have what human beings simply call intelligence, and that is before even considering Gardner's nine types of human intelligence. For us, human intelligence remains inextricably linked to subjectivity. ChatGPT does not – yet! – have what philosophy calls personhood.

Compared with what philosophy grapples with (personhood, subjectivity, even human intelligence), artificial intelligence is, boiled down to its most basic level, a rather simple thing. It operates with a self-improving statistical probability formula written as computer code: an algorithm.

ChatGPT is based on learned statistical probabilities. Amazon is one of the masters of statistical probability: Amazon knows that you will order condoms before you even know it yourself! And with that, it makes millions of dollars selling a huge range of items, including condoms in 811 versions.

Apply statistical probability not to condoms but to language, and you get ChatGPT: it simply imitates human speech and generates a new text with each request. But, and here comes the key to it all, it does this based on what it finds in the texts it has learned from.
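To make “which word follows which word” a little more concrete, here is a toy sketch in Python. It is a deliberate simplification under loud assumptions: ChatGPT is a transformer neural network trained on enormous amounts of text, not a word-counting table, and the function names (`train_bigrams`, `next_word_probabilities`, `generate`) and the tiny corpus are hypothetical, made up for illustration. The sketch only shows the principle of choosing the next word according to probabilities learned from existing text.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it in the training text.

    Toy "bigram" model for illustration only; real language models
    such as GPT learn far richer patterns with neural networks.
    """
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probabilities(follows: dict, word: str) -> dict:
    """Turn raw follow-up counts into probabilities that sum to 1."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(follows: dict, start: str, length: int, seed: int = 0) -> str:
    """Build a text word by word, sampling each next word by its probability."""
    rng = random.Random(seed)
    out = [start.lower()]
    for _ in range(length - 1):
        probs = next_word_probabilities(follows, out[-1])
        if not probs:  # the model has never seen a word after this one
            break
        candidates, weights = zip(*probs.items())
        out.append(rng.choices(candidates, weights=weights)[0])
    return " ".join(out)


corpus = "the teacher reads the essay and the student writes the essay again"
model = train_bigrams(corpus)
print(next_word_probabilities(model, "the"))
# → {'teacher': 0.25, 'essay': 0.5, 'student': 0.25}
print(generate(model, "the", 6))
```

Because such a model can only recombine word pairs it has already seen, it mirrors the point above in miniature: the machine produces text “based on what it finds”, not on understanding.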

In other words, ChatGPT simply cannot – yet! – write a Nobel Prize-winning novel. ChatGPT is no Bob Dylan, Svetlana Alexievich, Doris Lessing, the superb Harold Pinter, the even more superb Elfriede Jelinek, Günter Grass, Dario Fo, or the magnificent Gabriel García Márquez.

For ChatGPT to work, the existing language models only had to be garnished with a new, algorithm-driven function: chatting. ChatGPT may be no literary genius like Thomas Mann, but it makes the program easy to use and brings fun into the game. The rather conservative Thomas Mann was not as funny as he was brilliant and creative.

Anyone can prompt ChatGPT, that is, make a request to the program. Thanks to this ease of use, the inventors of ChatGPT were able to unleash their little monster on the whole world. Since then, ChatGPT has been further trained and improved through the prompts of what are currently well over 100 million people.

The fact that this data travels through the internet in just seconds is what makes ChatGPT the big thing it is. For the first time, Google is not leading but has to follow suit, and is thus forced into second place. Hastily, Google released its own AI language program, called Bard.

It was plagued with problems. At its very first presentation, Google's AI chatbot Bard spread false information, which caused the stock of Google's parent company, Alphabet, to plummet. Meanwhile, China's search engine Baidu responded with its chatbot Ernie.

Ernie and Bard: not a Sesame Street joke. And, as one might imagine, there is also a German version. It was created by a Heidelberg-based company called Aleph Alpha, which is almost on an equal footing when it comes to AI.

Meanwhile, other German companies were still using GPT-3 for their products. With their versions, anyone can integrate an AI voice into their own software free of charge. One of these German companies was Mind-Verse from Berlin. Its 25-year-old boss, Noel Lorenz, was fascinated by GPT-3 when it was still in its development stage.

This model was finally able to recognize patterns in data independently and create human-like texts. This was possible because the AI was trained on a volume of data that was soon 1,500 times as large as that of the previous version.

Founded two years ago, Mind-Verse is now riding the wave of ChatGPT's success. Compared with other GPTs, Mind-Verse speaks better German. It is also more up to date thanks to its live data from the Internet.

In addition to the chat function, Mind-Verse offers fine-tuned models that are tailored more specifically to particular data sets than ChatGPT is. Because the network only responds as well as it is asked, Mind-Verse's software makes requests more concrete.

One of its functions is designed to turn key points into continuous text. Others write argumentative essays and catchy advertising headlines, and even compose song lyrics.

Yet in one case, the German AI wrongly attributed Paul Boldt's poem “Berliner Abend” to Erich Kästner. Worse, some of the machine's arguments are partly arch-conservative, partly unworldly.

One 10th grader said she prefers ChatGPT, while another student said you have to remain skeptical so that you don't make mistakes. Many German students think that ChatGPT tends to draw a blank on topics with no right or wrong answer, and when an argument becomes ethical, emotional, or personal.

In any case, ChatGPT is a language machine, not a knowledge machine. In other words, the program often waffles, tends to be overconfident, and is habitually thin and shallow on content. As a consequence, many German students think that a chatbot can have at least nine problems:

1) It uses empty words,

2) It uses bad sources such as blogs and even Germany's low-grade tabloids,

3) It tends to ignore direct quotes,

4) It does not read between the lines,

5) It always prefers to present something, even if it is nonsense, rather than say nothing,

6) It uses a lot of empty phrases,

7) It tends to use foreign words inflationarily and to twist their meaning,

8) It appears confident, but this confidence repeatedly comes with ignorance, and finally,

9) It gives the illusion of plausibility where there is none.

Nevertheless, another student, Max, also works a lot with the language tool. When he writes an essay, AI shortens the time spent on hard work so that he has more time for creative thinking. Max strongly rejects the idea that ChatGPT does all his homework or that he uses it out of pure laziness. It's not that easy, Max says.

In May 2022, Max was asked to write a rather technical, AI-supported paper. Max believes the program will be a source of inspiration. ChatGPT has already suggested good headlines and arguments that go beyond what Max could have come up with by himself.

For many students, whether using ChatGPT is acceptable is still not clear-cut. Most German students believe that using artificial intelligence for a thesis is “not” plagiarism. According to one view, plagiarism requires that another person's intellectual property be stolen.

Yet there is still a difference between human and machine, and passing off an AI-made text as your own intellectual property remains highly questionable. This potential to deceive brings two additional problems:

1) Even now, there is still no tool that can accurately recognize AI content. Some argue that this will remain the case in the future, as the language AI uses keeps getting better and more human-like;

2) and all this raises the question: where does deception begin? Is it deception when entire AI-generated paragraphs are used? Is it deception when using only a few words or just borrowing an idea? Is it deceitful to run a text through a spell checker, or simply to use a calculator?

Undeterred, Germany's sixteen states, which are responsible for education, do not seem to be planning to ban ChatGPT. At the Didacta education fair in Stuttgart, participants agreed that AI language tools have too much potential to be banned.

Meanwhile, in neighboring Hessen, the state's Ministry of Education emphasized that AI applications could support students individually in their learning process. North Rhine-Westphalia has recently published a guide on AI in schools, which does not prohibit working with AI-based text robots.

So far, nobody has written ChatGPT guidelines for schoolteachers. Germany's approach might be described as: we see it coming, we know that we have to act, but we prefer to ride it out. On the other hand, there is the more optimistic view that AI will simplify a lot of work and replace jobs while simultaneously creating new ones.

In other words, AI machines can take on the exhausting, monotonous tasks while people concentrate on creativity, meaningfulness, and togetherness. Either way, ChatGPT will have a strong impact on everyday university life. One might find that ChatGPT does some things well while failing spectacularly at others.

It might be argued that strong students will be inspired by arguments produced by AI. On the other hand, ChatGPT might fundamentally change university teaching as well as the prevailing examination “culture”. Already, most students have to hand in their mobile phones before an exam. The university turns off the Wi-Fi, and students are, literally, locked in a room, only to come out after 90 minutes with an exam result.

University curricula, increasingly set by “corporate” apparatchiks rather than by academics, have become competence-oriented testing regimes focused on skills and practical implementation. AI could change that.

Artificial intelligence could potentially render entire lessons obsolete, simply because many basics could be accomplished rather easily by an AI program. This also means that professors would no longer have to assign their classes stupid tasks which the professors themselves then have to correct, rather stupidly, afterwards.

The Digital Divide – Rich and Poor

Worse, ChatGPT and AI also have the potential to deepen what became known as “the digital divide”, which can run through schools and universities alike. Both AI and ChatGPT have the ability to cement the digital divide:

+ between rich schools with good equipment and poor schools with poor equipment;

+ between stronger and weaker students; and

+ between the poor and the rich, particularly through paid premium access to ChatGPT.

All this applies not just to universities and schools. Today, we all encounter artificial intelligence. The difference is that some do so actively while others do so unconsciously. Some people have realized that they are being flooded with commercial, often useless, and even manipulative information, or a combination of all three.

This flood is machine-driven, powered by artificial intelligence using what is euphemistically known as “persuasive technology”. In short, people are being influenced.

In the end, given the stratospheric rise of ChatGPT and artificial intelligence, it will probably soon be normal for us to distinguish between AI/ChatGPT content and human-written texts, as long as we “are still able” to notice the difference.