Kevin Reed

The US military has issued a call for research proposals from technology partners to develop an automated system capable of scanning the entire internet and locating and censoring content deemed "false media assets" and "disinformation." According to government documents, the requested solution would provide "innovative semantic technologies for analyzing media" that will help "identify, deter, and understand adversary disinformation campaigns."

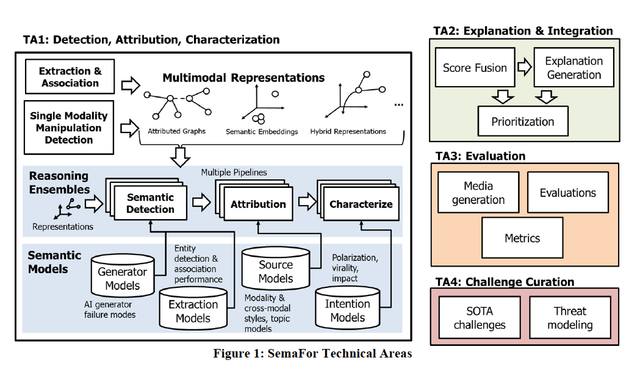

On August 23, the Defense Advanced Research Projects Agency (DARPA) issued a solicitation for a so-called Semantic Forensics (SemaFor) program on the federal government business opportunities website. According to the bid specifications, SemaFor “will develop technologies to automatically detect, attribute, and characterize falsified multi-modal media assets (text, audio, image, video) to defend against large-scale, automated disinformation attacks.”

In other words, the US Defense Department is seeking a technology partner to build a platform that would enable the Pentagon to locate any content it identifies as an adversarial "disinformation attack" and shut it down. This technology would cover anything on the internet, including web pages, videos, photos, and social media platforms.

The documents released as part of the DARPA request say that the technology and software algorithms it seeks would "constitute advances to the state of the art," would be top secret, and would not include "information that is lawfully publicly available without restrictions."

Diagram of the Semantic Forensics online censorship system sought by the Defense Department

These advances would involve moving away from "statistical detection techniques," which are becoming "insufficient for detecting false media assets." The use of intelligent semantic techniques (the ability of a program to analyze online content in context and determine its meaning and intent) requires the latest developments in artificial intelligence and so-called "neural networks" that can "learn" and improve their performance over time. According to the US Defense Department, semantic analysis will be able to accurately identify inconsistencies within artificially generated content and thereby establish that content as "false."

There are three components to DARPA's requested solution. The first is to "determine if media is generated or manipulated"; the second is attribution, in which "attribution algorithms will infer if media originates from a particular organization or individual"; and the third is to determine whether the media was "manipulated for malicious purposes." Although the request refers to deterrence, it gives no details about how the Pentagon intends to act on the content it has identified as "false."
